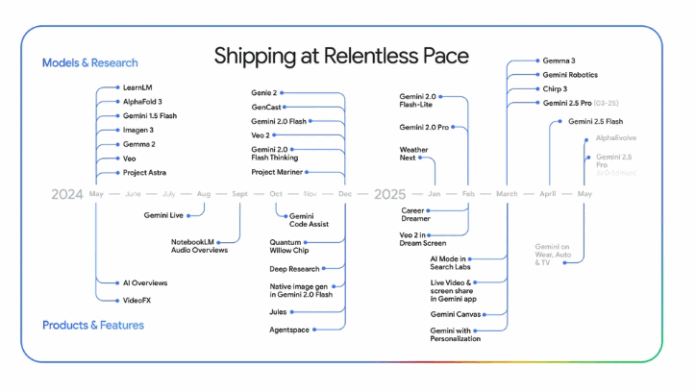

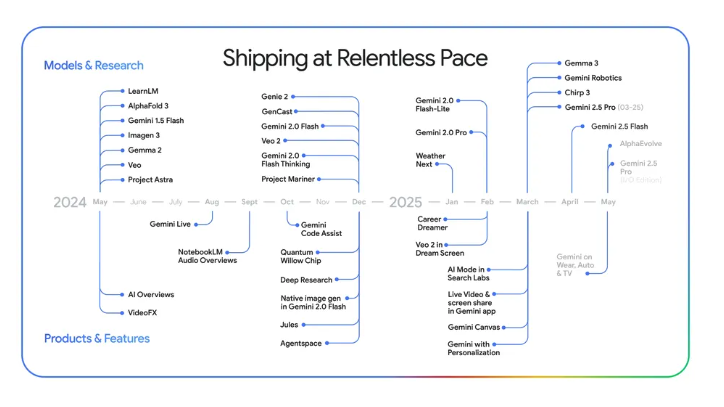

After three hours at Google’s I/O 2025 event in Silicon Valley, it became increasingly clear that the company is rallying its formidable AI efforts with singular focus. Those efforts are prominently branded under the Gemini name but span a wide range of underlying model architectures and research. Google is releasing a slew of innovations and technologies around Gemini, then integrating them into products at a breathtaking pace.

Beyond the headline-grabbing features, Google signaled a bolder ambition: an operating system for the AI era, not the disk-booting kind, but a logic layer that every app can tap. At its center is a “world model” designed to power a universal assistant that understands our physical surroundings, reasons about our intentions, and acts on our behalf. It’s a strategic offensive that many observers may have missed amid the barrage of features.

On one hand, it’s a high-stakes strategy to leapfrog entrenched competitors. On the other hand, as Google pours billions into this moonshot, a crucial question arises: Can Google’s brilliance in AI research and technology translate into products faster than its rivals, whose edge lies in packaging AI into immediately usable and commercially potent products? Can Google out-maneuver a laser-focused Microsoft, fend off OpenAI’s vertical hardware dreams, and, crucially, keep its own search empire alive in the disruptive currents of AI?

Google is already pursuing this future on a grand scale. Pichai told I/O that the company now processes 480 trillion tokens a month – 50x more than a year ago – and almost 5x more than the 100 trillion tokens a quarter that Microsoft’s Satya Nadella said his company processed. Developer adoption reflects this momentum: Pichai reported that over 7 million developers are building with the Gemini API, a five-fold increase since the previous I/O, and that Vertex AI usage has increased by more than 40 percent. Costs keep falling, too, as Gemini 2.5 models and the Ironwood TPU squeeze more performance from each watt and dollar. AI Mode (rolling out in the U.S.) and AI Overviews (already serving 1.5 billion users monthly) are the live test beds where Google tunes latency, quality, and future ad formats as it shifts search into an AI-first era.

Google’s doubling down on what it calls “a world model,” an AI that it hopes to imbue with a deep understanding of real-world dynamics, and with it a vision for a universal assistant powered by Google rather than by other companies, creates another significant tension. Does Google primarily want to leverage it for itself first, to protect its $200 billion search business, which depends on owning the starting point and avoiding disruption by OpenAI? Or will Google fully open its foundational AI to other developers and companies, another significant part of its business, which engages more than 20 million developers, more than any other company?

Google has sometimes stopped short of a wholehearted focus on building these core products for others with the same clarity as its archrival, Microsoft. That’s because it keeps a lot of core functionality reserved for its cherished search engine. That said, Google is making significant efforts to give developers access wherever possible. A telling example is Project Mariner. Google could have kept the agentic browser-automation features inside Chrome, giving consumers an immediate showcase under its own control. However, Google followed up by saying Mariner’s computer-use capabilities would be released via the Gemini API more broadly “this summer.” This signals that external access is coming for any rival that wants comparable automation. In fact, Google said partners Automation Anywhere and UiPath were already building with it.

Google’s grand design: the “world model” and universal assistant

The clearest articulation of Google’s grand design came from Demis Hassabis, CEO of Google DeepMind, during the I/O keynote. He said Google continues to “double down” on its AGI efforts. While Gemini was already “the best multimodal model,” Hassabis explained, Google is working hard to “extend it to become what we call a world model”: a model that, like the brain, can simulate aspects of the world, make plans, and imagine new experiences.

This concept of “a world model,” as articulated by Hassabis, is about creating AI that learns the underlying principles of how the world works – simulating cause and effect, understanding intuitive physics, and ultimately learning by observing, much like a human does. Google DeepMind’s work on models like Genie 2 is an early and significant indicator of this direction, though one easily overlooked by those not steeped in foundational AI research. This research shows how to generate interactive, two-dimensional game environments and playable worlds from varied prompts like images or text. It offers a glimpse of an AI that can simulate and understand dynamic systems.

Hassabis has developed this concept of a “world model” and its manifestation as a “universal AI assistant” in several talks since late 2024, and it was presented at I/O most comprehensively – with CEO Sundar Pichai and Gemini lead Josh Woodward echoing the vision on the same stage. While other AI leaders, including Satya Nadella from Microsoft, Sam Altman from OpenAI, and Elon Musk from xAI, have all discussed “world models,” Google uniquely and most comprehensively ties this fundamental idea to its near-term strategic thrust, the “universal AI assistant.”

Speaking about the Gemini app, Google’s equivalent to OpenAI’s ChatGPT, Hassabis declared, “This is our ultimate vision for the Gemini app, to transform it into a universal AI assistant, an AI that’s personal, proactive, and powerful, and one of our key milestones on the road to AGI.”

This vision was made tangible through I/O demonstrations. Google demoed a new app called Flow, a drag-and-drop filmmaking canvas that preserves character and camera consistency. It makes use of Veo 3, the new model that combines physics-aware video with native audio. To Hassabis, that pairing is early proof that “world-model understanding is already leaking into creative tooling.” On robotics, he also cited the fine-tuned Gemini Robotics model, arguing that “AI systems will need world models to operate effectively.”

CEO Sundar Pichai reinforced this, citing Project Astra, which “explores the future capabilities of a universal AI assistant that can understand the world around you.” These features, such as screen sharing and live video comprehension, are now integrated into Gemini Live. Josh Woodward, who leads Google Labs and the Gemini app, detailed the app’s goal to be the “most personal, proactive, and powerful AI assistant.” He demonstrated how “personal context” (connecting search history and soon Gmail/Calendar) allows Gemini to anticipate needs, such as creating personalized exam quizzes or explainer videos using analogies that a user is familiar with (for instance, thermodynamics explained via cycling). This, Woodward emphasized, is “where we’re headed with Gemini,” enabled by the Gemini 2.5 Pro model allowing users to “think things into existence.”

The new developer tools unveiled at I/O are the building blocks. Gemini 2.5 Pro with “Deep Think” and the hyper-efficient 2.5 Flash (now with native audio and URL context grounding from the Gemini API) form the core intelligence. Google also quietly previewed Gemini Diffusion, signaling its willingness to move beyond pure Transformer stacks when doing so yields better efficiency or latency. Google is packing these capabilities into a crowded toolkit: AI Studio and Firebase Studio are core starting points for developers, while Vertex AI remains the enterprise on-ramp.

The strategic stakes: defending search and courting developers amid an AI arms race

This colossal undertaking is driven by Google’s massive R&D capabilities but also by strategic necessity. A Fortune 500 Chief AI Officer told VentureBeat that Microsoft has a strong hold on the enterprise software market, reassuring customers with its full commitment to Copilot tooling. The executive requested anonymity because of the sensitivity of commenting on the intense competition between the AI cloud providers. Microsoft’s dominance in Office 365 productivity applications will be particularly difficult to shake, the executive said.

Google’s path to potential leadership – its “end-run” around Microsoft’s enterprise hold – lies in redefining the game with a fundamentally superior, AI-native interaction paradigm. If Google creates a truly “universal AI assistant” powered by a comprehensive world model, it could serve as the foundation for how users and businesses interact with technology. As Pichai mused with podcaster David Friedberg shortly before I/O, that means awareness of physical surroundings. And so AR glasses, Pichai said, “maybe that’s the next leap… that’s what’s exciting for me.”

But this AI offensive is a race against multiple clocks. First, the $200 billion search business that funds Google must be safeguarded even as it is reinvented. The U.S. Department of Justice’s monopolization ruling still hangs over Google, with divestiture of Chrome floated as the leading remedy. In Europe, the Digital Markets Act and any new copyright-liability lawsuits could also hem in how freely Gemini crawls or displays the open web.

Finally, execution speed matters. Google has received criticism for moving too slowly in the past. But over the past 12 months, it became clear Google had been working patiently on multiple fronts, and that it has paid off with faster growth than rivals. The difficulty of successfully navigating this AI transition at scale is enormous, as demonstrated by a recent Bloomberg report showing how even a tech titan like Apple is dealing with significant setbacks and internal restructuring in its AI initiatives. This industry-wide difficulty underscores the high stakes for all players. Pichai lacks the showmanship of some rivals, but the long list of enterprise customer testimonials about actual AI deployments that Google displayed at its Cloud Next event last month highlights a leader who lets sustained product cadence and enterprise wins speak for themselves.

At the same time, more focused competitors are advancing. Microsoft’s enterprise march continues. Its Build conference presented Microsoft 365 Copilot as the “UI for AI,” Azure AI Foundry as a “production line for intelligence,” and Copilot Studio for building agents, along with impressive low-code workflow demos (Microsoft Build Keynote, Miti Joshi at 22:52, Kadesha Kerr at 51:26). Nadella’s “open agentic web” vision (NLWeb, MCP) offers businesses a pragmatic AI adoption path, allowing selective integration of AI tech – whether it be Google’s or another competitor’s – within a Microsoft-centric framework.

OpenAI, meanwhile, is far ahead in the consumer reach of its ChatGPT product, with recent statements from the company mentioning 600 million monthly users and 800 million weekly users. This compares to the Gemini app’s 400 million monthly users. Additionally, OpenAI reported that it plans to launch an ad offering in December, which could pose an existential threat to Google’s search strategy. Beyond making leading models, OpenAI is making a provocative vertical play with its reported $6.5 billion acquisition of Jony Ive’s IO, pledging to move “beyond these legacy products” – and hinting that it will launch a hardware product that attempts to disrupt AI just as the iPhone disrupted mobile. OpenAI’s ability to build a deep moat like Apple did with the iPhone may be limited in an AI era increasingly defined by open protocols (like MCP) and easier model interchangeability, but any of this could still undermine Google’s next-gen personal computing ambitions.

Internally, Google navigates its vast ecosystem. As Jeanine Banks, Google’s VP of Developer X, told VentureBeat, serving Google’s diverse global developer community means “it’s not a one size fits all,” which results in a wealth of tools, including AI Studio, Vertex AI, Firebase Studio, and numerous APIs.

Meanwhile, Amazon is pressing from another flank: Bedrock already hosts Anthropic, Meta, Mistral and Cohere models, giving AWS customers a pragmatic, multi-model default.

For business decision-makers: navigating Google’s “world model” future

Google’s audacious bid to build the foundational intelligence for the AI age presents enterprise leaders with compelling opportunities and critical considerations:

- Move now or retrofit later: Falling a release cycle behind could force time-consuming rewrites once assistant-first interfaces become the default.

- Tap into revolutionary potential: For organizations seeking to embrace the most powerful AI, leveraging Google’s “world model” research, multimodal capabilities (like Veo 3 and Imagen 4 showcased by Woodward at I/O), and the AGI trajectory promised by Google offers a path to potentially significant innovation.

- Prepare for a new interaction paradigm: If Google’s “universal assistant” succeeds, it would become a primary new interface to services and data. Enterprises should strategize for integration via APIs and agentic frameworks for context-aware delivery.

- Factor in the long-term commitment (and its risks): Aligning with Google’s vision is a long-term commitment. The full “world model” and AGI are potentially distant horizons. Decision-makers must balance these against current requirements and platform complexity.

- Contrast with focused alternatives: Pragmatic solutions from Microsoft offer tangible enterprise productivity now. Another distinct path is provided by disruptive hardware-AI from OpenAI/IO. A diversified strategy, leveraging the best of each, often makes sense, especially with the increasingly open agentic web allowing for such flexibility.

These nuanced choices and real-world AI adoption strategies will be a focus of discussion at VentureBeat’s Transform 2025. The leading independent event brings enterprise technical decision-makers together with leaders from pioneering companies to share firsthand experiences on platform choices – Google, Microsoft, and beyond – and navigating AI deployment, all curated by the VentureBeat editorial team. Early registration is advised because seating is limited.

Google’s defining offensive: shaping the future or strategic overreach?

Google’s I/O spectacle was a powerful statement of how Google intends to architect and operate the foundational intelligence of the AI-driven future. Its pursuit of a “world model” and its AGI ambitions aim to redefine computing, outflank competitors, and secure its dominance. The technological potential is enormous, and the audacity is astounding.

The big question is execution and timing. Can Google quickly innovate and meld its diverse technologies into a single, compelling experience as its rivals bolster their positions? Can it do so while transforming search and navigating regulatory challenges? And can it do so with such a broad focus on both business and consumers, which is arguably much wider than that of its main rivals?

The next few years will be pivotal. If Google fulfills its “world model” vision, it could usher in an era of personalized, ambient intelligence, effectively becoming the new operational layer of our digital lives. If not, its grand ambition could be a cautionary tale of a giant reaching for everything, only to find the future defined by others who aimed more narrowly and moved more quickly.