Everywhere you look, people are talking about AI agents like they’re only a prompt away from replacing entire departments. The dream is appealing: Autonomous systems that can handle anything you throw at them, no scaffolding, no constraints; just give them your AWS credentials and they’ll solve all your problems. But the reality is that’s just not how the world works, especially not in the enterprise, where reliability isn’t optional.

Even if an agent is 99% accurate, that’s not always good enough. If it’s optimizing food delivery routes, that means one out of every hundred orders ends up at the wrong address. In a business context, that kind of failure rate isn’t acceptable. It’s expensive, risky and hard to explain to a customer or regulator.
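The arithmetic is worth spelling out. The sketch below is illustrative only. The volume of 10,000 deliveries per day and the five-step chain are assumed numbers. The chained calculation also assumes that each step fails independently of the others.

```python
def expected_failures(volume: int, accuracy: float) -> float:
    """Expected number of wrong outcomes out of `volume` independent decisions."""
    return volume * (1.0 - accuracy)


def chained_success_rate(step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain succeeds, assuming independent errors."""
    return step_accuracy ** steps


# Illustrative numbers only: 10,000 deliveries per day at 99% accuracy.
daily_deliveries = 10_000
print(round(expected_failures(daily_deliveries, 0.99)))  # 100 wrong deliveries per day
# Five chained steps, each 99% accurate, succeed end to end less often than any one step.
print(round(chained_success_rate(0.99, 5), 3))  # 0.951
```

At scale, "one in a hundred" becomes a steady daily stream of failures. Once several 99%-accurate steps are chained, the errors compound.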

In real-world environments like finance, healthcare and operations, the AI systems that actually deliver value don’t look anything like these frontier fantasies. They aren’t improvising in the open world; they’re solving well-defined problems with clear inputs and predictable outcomes.

If we keep chasing open-world problems with half-ready technologies, we’ll waste time, money and trust. But if we focus on the problems right in front of us, the ones with obvious ROI and well-defined boundaries, we can make AI work today.

This article is about cutting through the hype and building AI agents that actually ship, run and help.

The trouble with the open-world hype

The tech industry loves a moonshot (and for the record, I do too). Right now, the moonshot is open-world AI: Agents that can handle anything, adapt to new situations, learn on the fly and operate with incomplete or ambiguous information. It’s the dream of general intelligence: Systems that can not only reason, but improvise.

What makes a problem “open world”?

Open-world problems are defined by what we don’t know.

More formally, drawing from research defining these complex environments, a fully open world is characterized by two core properties:

- Time and space are unbounded: An agent’s past experiences may not apply to new, unseen scenarios.

- Tasks are unbounded: They aren’t predetermined and can emerge dynamically.

In such environments, the AI operates with incomplete information; it cannot assume that what isn’t known to be true is false; it’s simply unknown. The AI is expected to adapt to these unforeseen changes and novel tasks as it navigates the world. This presents an incredibly difficult set of problems for current AI capabilities.

Most enterprise problems aren’t like this

In contrast, closed-world problems are ones where the scope is known, the rules are clear and the system can assume it has all the relevant data. If something isn’t explicitly true, it can be treated as false. These are the kinds of problems most businesses actually face every day: invoice matching, contract validation, fraud detection, claims processing, inventory forecasting.

| Feature | Open world | Closed world |
| --- | --- | --- |
| Scope | Unbounded | Well-defined |
| Knowledge | Incomplete | Complete (within domain) |
| Assumptions | Unknown ≠ false | Unknown = false |
| Tasks | Emergent, not predefined | Fixed, repetitive |
| Testability | Extremely hard | Well-bounded |
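The "Assumptions" row is the core of the distinction. Below is a toy Python sketch that contrasts the two query semantics. The fact store, the `Truth` enum and both query functions are hypothetical. They are not drawn from any particular knowledge-representation library.

```python
from enum import Enum


class Truth(Enum):
    TRUE = "true"
    FALSE = "false"
    UNKNOWN = "unknown"


# Hypothetical example data: the only fact the system knows.
KNOWN_FACTS = {("PO-1001", "approved")}


def closed_world_query(fact: tuple[str, str]) -> Truth:
    """Closed-world assumption: anything not known to be true is treated as false."""
    return Truth.TRUE if fact in KNOWN_FACTS else Truth.FALSE


def open_world_query(fact: tuple[str, str], known_false: set[tuple[str, str]]) -> Truth:
    """Open-world assumption: a missing fact is unknown unless explicitly known false."""
    if fact in KNOWN_FACTS:
        return Truth.TRUE
    if fact in known_false:
        return Truth.FALSE
    return Truth.UNKNOWN
```

You can see the difference by asking both functions about a purchase order that isn't in the store. `closed_world_query(("PO-2002", "approved"))` returns `Truth.FALSE`, while `open_world_query(("PO-2002", "approved"), set())` returns `Truth.UNKNOWN`.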

These aren’t the use cases that typically make headlines, but they’re the ones businesses actually care about solving.

The risk of hype and inaction

The hype is also harmful: By setting the bar at open-world general intelligence, we make enterprise AI feel inaccessible. Leaders hear about agents that can do everything, and they freeze because they don’t know where to start. The problem feels too big, too vague, too risky.

It’s like trying to design autonomous vehicles before we’ve even built a working combustion engine. The dream is exciting, but skipping the fundamentals guarantees failure.

Solve what’s right in front of you

Open-world problems make for great demos and even better funding rounds. But closed-world problems are where the real value is today. They’re solvable, testable and automatable. And they’re sitting inside every enterprise, just waiting for the right system to tackle them.

The question isn’t whether AI will solve open-world problems eventually. The question is: What can you actually deploy right now that makes your business faster, smarter and more reliable?

What enterprise agents actually look like

When people imagine AI agents today, they tend to picture a chat window. A user types a prompt, and the agent responds with a helpful answer (maybe even triggers a tool or two). That’s fine for demos and consumer apps, but it’s not how enterprise AI will actually work in practice.

In the enterprise, most useful agents aren’t user-initiated; they’re autonomous.

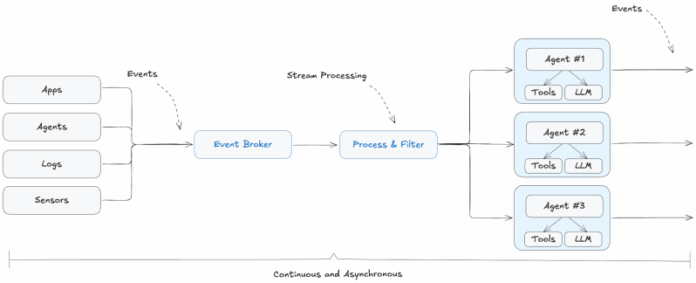

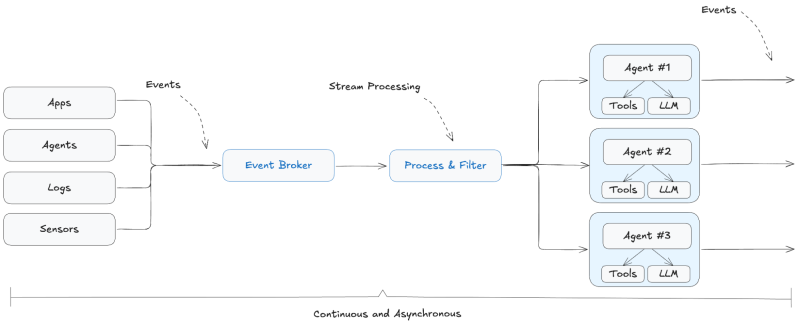

They don’t sit idly waiting for a human to prompt them. They’re long-running processes that react to data as it flows through the business. They make decisions, call services and produce outputs, continuously and asynchronously, without needing to be told when to start.

Imagine an agent that monitors new invoices. Every time an invoice lands, it extracts the relevant fields, checks them against open purchase orders, flags mismatches and either routes the invoice for approval or rejection, without anyone asking it to do so. It just listens for the event (“new invoice received”) and goes to work.
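Here is a minimal sketch of that invoice agent's core decision. It assumes the fields have already been extracted, which is the step where a model might help. It also assumes open purchase orders are available as a lookup. `Invoice`, `PurchaseOrder`, `handle_invoice_received` and the one-cent `tolerance` are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class PurchaseOrder:
    po_number: str
    vendor: str
    amount: float


@dataclass
class Invoice:
    invoice_id: str
    po_number: str
    vendor: str
    amount: float


def handle_invoice_received(invoice: Invoice, open_pos: dict[str, PurchaseOrder],
                            tolerance: float = 0.01) -> dict:
    """Check an extracted invoice against open POs and decide where to route it."""
    mismatches: list[str] = []
    po = open_pos.get(invoice.po_number)
    if po is None:
        mismatches.append("no matching open purchase order")
    else:
        if po.vendor != invoice.vendor:
            mismatches.append("vendor mismatch")
        if abs(po.amount - invoice.amount) > tolerance:
            mismatches.append("amount mismatch")
    # Clean matches go to approval; anything flagged goes to rejection.
    route = "approval" if not mismatches else "rejection"
    return {"invoice_id": invoice.invoice_id, "route": route, "mismatches": mismatches}
```

The matching and routing here are plain, deterministic code. In a real deployment, a consumer subscribed to the "new invoice received" event would invoke this function, not a person.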

Or think about customer onboarding. An agent might watch for the moment a new account is created, then kick off a cascade: verify documents, run know-your-customer (KYC) checks, personalize the welcome experience and schedule a follow-up message. The user never knows the agent exists. It just runs. Reliably. In real time.
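The onboarding cascade can be sketched as a chain of handlers on an event bus. Each step listens for one event and publishes the next. The in-memory `EventBus`, the event names and the placeholder step bodies are all assumptions for illustration. A production system would use a real message broker and real verification and KYC services.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Minimal in-memory publish/subscribe bus, standing in for a real message broker."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)
        self.log: list[str] = []  # event types in the order they were published

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        self.log.append(event_type)
        for handler in self._subscribers[event_type]:
            handler(payload)


bus = EventBus()


def verify_documents(account: dict) -> None:
    # Placeholder: a real step would call a document-verification service.
    if account.get("documents"):
        bus.publish("documents.verified", account)


def run_kyc_checks(account: dict) -> None:
    # Placeholder: a real step would call a KYC provider and could stop the cascade.
    bus.publish("kyc.passed", account)


def personalize_welcome(account: dict) -> None:
    # The kind of step where a language model could draft the message.
    message = f"Welcome aboard, {account['name']}!"
    bus.publish("welcome.sent", {**account, "message": message})


def schedule_follow_up(account: dict) -> None:
    bus.publish("followup.scheduled", account)


# Wire the cascade: each step reacts to the previous step's event.
bus.subscribe("account.created", verify_documents)
bus.subscribe("documents.verified", run_kyc_checks)
bus.subscribe("kyc.passed", personalize_welcome)
bus.subscribe("welcome.sent", schedule_follow_up)
```

Publishing `account.created` with `{"name": "Ada", "documents": ["passport.pdf"]}` drives the whole chain. Afterward, `bus.log` is `["account.created", "documents.verified", "kyc.passed", "welcome.sent", "followup.scheduled"]`, and no user ever called the later steps directly.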

This is what enterprise agents look like:

- They’re event-driven: Triggered by changes in the system, not user prompts.

- They’re autonomous: They act without human initiation.

- They’re continuous: They don’t spin up for a single task and disappear.

- They’re mostly asynchronous: They work in the background, not in blocking workflows.
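Those four properties map naturally onto a long-running consumer loop. The sketch below uses Python's standard `asyncio.Queue` as a stand-in for an event stream. The event shape and the `None` shutdown sentinel are assumptions, added only so the demo terminates.

```python
import asyncio


async def agent_loop(events: asyncio.Queue, results: list) -> None:
    """A long-running agent: waits for events and handles each one as it arrives."""
    while True:
        event = await events.get()
        if event is None:  # Sentinel used here only to stop the demo cleanly.
            events.task_done()
            break
        results.append(f"handled {event['type']} for {event['id']}")
        events.task_done()


async def main() -> list:
    events: asyncio.Queue = asyncio.Queue()
    results: list = []
    # The agent runs as a background task; producers never call it directly.
    worker = asyncio.create_task(agent_loop(events, results))
    for i in range(3):
        await events.put({"type": "invoice.received", "id": f"INV-{i}"})
    await events.put(None)
    await worker
    return results


if __name__ == "__main__":
    print(asyncio.run(main()))
```

`asyncio.run(main())` returns `['handled invoice.received for INV-0', 'handled invoice.received for INV-1', 'handled invoice.received for INV-2']`. In production there would be no sentinel: the loop would keep running and wait for the next event.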

You don’t build these agents by fine-tuning a giant model. You build them by wiring together existing models, tools and logic. It’s a software engineering problem, not a modeling one.

At their core, enterprise agents are just modern microservices with intelligence. You give them access to events, give them the right context and let a language model drive the reasoning.

Agent = Event-driven microservice + context data + LLM
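One way to read that formula as code is sketched below. `Agent`, `fetch_context` and `llm` are hypothetical names. Here `llm` is any callable that takes a prompt string and returns text, so no particular model provider or SDK is assumed.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Agent:
    """Agent = event-driven microservice + context data + LLM, as a minimal sketch."""

    event_type: str                        # the event this service subscribes to
    fetch_context: Callable[[dict], dict]  # pulls the business data the model needs
    llm: Callable[[str], str]              # any text-in/text-out model client

    def handle(self, event: dict) -> Optional[str]:
        if event.get("type") != self.event_type:
            return None  # not an event this agent is responsible for
        context = self.fetch_context(event)
        prompt = (
            f"Event: {event}\n"
            f"Context: {context}\n"
            "Reply with the next action."
        )
        return self.llm(prompt)
```

The model client is injected, so the same service can be tested with a fake `llm` and pointed at a real model in production.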

Done well, that’s a powerful architectural pattern. It’s also a shift in mindset. Building agents isn’t about chasing artificial general intelligence (AGI). It’s about decomposing real problems into smaller steps, then assembling specialized, reliable components that can handle them, just like we’ve always done in good software systems.

We’ve solved this kind of problem before

If this sounds familiar, it should. We’ve been here before.

When monoliths couldn’t scale, we broke them into microservices. When synchronous APIs led to bottlenecks and brittle systems, we turned to event-driven architecture. These were hard-won lessons from decades of building real-world systems. They worked because they brought structure and determinism to complex systems.

I worry that we’re starting to forget that history and repeat the same mistakes in how we build AI.

Because this isn’t a new problem. It’s the same engineering challenge, just with new components. And right now, enterprise AI needs the same principles that got us here: clear boundaries, loose coupling and systems designed to be reliable from the start.

AI models are not deterministic, but your systems can be

The problems worth solving in most businesses are closed-world: Problems with known inputs, clear rules and measurable outcomes. But the models we’re using, especially LLMs, are inherently non-deterministic. They’re probabilistic by design. The same input can yield different outputs depending on context, sampling or temperature.

That’s fine when you’re answering a prompt. But when you’re running a business process? That unpredictability is a liability.

So if you want to build production-grade AI systems, your job is simple: Wrap non-deterministic models in deterministic infrastructure.
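Here is a minimal sketch of that wrapping. It assumes the model is asked to reply with JSON that contains a `route` field. The allowed routes, the retry count and the `manual_review` fallback are illustrative choices, not a prescribed design. The point is that the model's output enters the business process only after it passes a deterministic check.

```python
import json
from typing import Callable

# Outputs the business process is allowed to act on (illustrative values).
ALLOWED_ROUTES = {"approve", "reject", "manual_review"}


def classify_with_guardrails(llm: Callable[[str], str], prompt: str,
                             max_attempts: int = 3) -> str:
    """Call a non-deterministic model but accept only outputs that pass a strict check."""
    for _ in range(max_attempts):
        raw = llm(prompt)
        try:
            route = json.loads(raw)["route"]
        except (json.JSONDecodeError, KeyError, TypeError):
            continue  # malformed or off-schema output: ask again
        if isinstance(route, str) and route in ALLOWED_ROUTES:
            return route
    # Deterministic fallback if the model never produces a valid answer.
    return "manual_review"
```

Sampling or temperature can still change what the model says. They can't change which answers the system is willing to act on.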

Build determinism around the model

- If you know a particular tool should be used for a task, don’t let the model decide, just call the tool.

- If your workflow can be defined statically, don’t rely on dynamic decision-making, use a deterministic call graph.

- If the inputs and outputs are predictable, don’t introduce ambiguity by overcomplicating the agent logic.
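To illustrate, here is a claims-processing pipeline sketched with a static call graph. The policy lookup always runs, and the routing rule is plain code. The model is used only to summarize the free-text damage description. `lookup_policy`, the `P-` policy-ID convention and the queue names are hypothetical.

```python
from typing import Callable


def lookup_policy(claim: dict) -> dict:
    # Hypothetical tool call; a real system would query a policy service here.
    return {**claim, "policy_active": claim["policy_id"].startswith("P-")}


def summarize_damage(claim: dict, llm: Callable[[str], str]) -> dict:
    # The one step that needs judgment, so it is the only one that uses the model.
    return {**claim, "summary": llm(claim["description"])}


def route_claim(claim: dict) -> dict:
    # A fixed business rule decides the queue, not the model.
    queue = "adjuster" if claim["policy_active"] else "rejected"
    return {**claim, "queue": queue}


def run_pipeline(claim: dict, llm: Callable[[str], str]) -> dict:
    # Static call graph: the same steps run in the same order on every claim.
    steps: list[Callable[[dict], dict]] = [
        lookup_policy,
        lambda c: summarize_damage(c, llm),
        route_claim,
    ]
    for step in steps:
        claim = step(claim)
    return claim
```

Nothing here asks the model which tool to call next. That keeps every run traceable and lets each step be tested on its own.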

Too many teams are reinventing runtime orchestration with every agent, letting the LLM decide what to do next, even when the steps are known ahead of time. You’re just making your life harder.

Where event-driven multi-agent systems shine

Event-driven multi-agent systems break the problem into smaller steps. When you assign each one to a purpose-built agent and trigger them with structured events, you end up with a loosely coupled, fully traceable system that works the way enterprise systems are supposed to work: With reliability, accountability and clear control.

And because it’s event-driven:

- Agents don’t need to know about each other. They just respond to events.

- Work can happen in parallel, speeding up complex flows.

- Failures are isolated and recoverable via event logs or retries.

- You can observe, debug and test each component in isolation.
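Several of these properties can be shown with a toy in-memory bus. The `ResilientBus` class and its dead-letter list are assumptions, not any specific broker's API. In the sketch below, subscribers never reference each other and every event is appended to a log. A handler that throws is parked for retry instead of blocking the other subscribers. Parallel execution is left out to keep the example short.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class ResilientBus:
    """In-memory pub/sub with an append-only log and a dead-letter list (a toy broker)."""

    def __init__(self) -> None:
        self.subscribers: dict[str, list[Handler]] = defaultdict(list)
        self.log: list[tuple[str, dict]] = []
        self.dead_letters: list[tuple[str, Handler, dict]] = []

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self.subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        self.log.append((event_type, payload))  # every event is recorded for replay
        for handler in self.subscribers[event_type]:
            self._deliver(event_type, handler, payload)

    def _deliver(self, event_type: str, handler: Handler, payload: dict) -> None:
        try:
            handler(payload)
        except Exception:
            # One failing agent does not stop the others; park the event for retry.
            self.dead_letters.append((event_type, handler, payload))

    def retry_dead_letters(self) -> None:
        pending, self.dead_letters = self.dead_letters, []
        for event_type, handler, payload in pending:
            self._deliver(event_type, handler, payload)
```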

Don’t chase magic

Closed-world problems don’t require magic. They need solid engineering. And that means combining the flexibility of LLMs with the structure of good software engineering. If something can be made deterministic, make it deterministic. Save the model for the parts that actually require judgment.

That’s how you build agents that don’t just look good in demos but actually run, scale and deliver in production.

Why testing is so much harder in an open world

One of the most overlooked challenges in building agents is testing, but it is absolutely essential for the enterprise.

In an open-world context, it’s nearly impossible to do well. The problem space is unbounded, so the inputs can be anything, the desired outputs are often ambiguous and even the criteria for success might shift depending on context.

How do you write a test suite for a system that can be asked to do almost anything? You can’t.

That’s why open-world agents are so hard to validate in practice. You can measure isolated behaviors or benchmark narrow tasks, but you can’t trust the system end-to-end unless you’ve somehow seen it perform across a combinatorially large space of situations, which no one has.

In contrast, closed-world problems make testing tractable. The inputs are constrained. The expected outputs are definable. You can write assertions. You can simulate edge cases. You can know what “correct” looks like.

And if you go one step further, decomposing your agent’s logic into smaller, well-scoped components using an event-driven architecture, it gets even more tractable. Each agent in the system has a narrow responsibility. Its behavior can be tested independently, its inputs and outputs mocked or replayed, and its performance evaluated in isolation.
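Here is what a closed-world test can look like for one narrowly scoped agent step, sketched with a mocked model. `extract_total` is a hypothetical step. The tests are plain functions that use `assert`, so they run under pytest or can be called directly.

```python
import json
from typing import Callable


def extract_total(document_text: str, llm: Callable[[str], str]) -> float:
    """Narrowly scoped step: ask the model for an invoice total, then validate it."""
    raw = llm(f"Return JSON with a numeric 'total' for this invoice:\n{document_text}")
    total = float(json.loads(raw)["total"])
    if total < 0:
        raise ValueError("total cannot be negative")
    return total


# Closed-world tests: fixed inputs, a mocked model and explicit expected outputs.
def test_valid_response() -> None:
    result = extract_total("Invoice INV-7, total due 125.50", lambda prompt: '{"total": 125.5}')
    assert result == 125.5


def test_negative_total_rejected() -> None:
    try:
        extract_total("Invoice INV-8", lambda prompt: '{"total": -3}')
    except ValueError:
        return  # expected: the guard rejected the bad value
    raise AssertionError("expected ValueError for a negative total")


def test_prompt_includes_document() -> None:
    seen: list[str] = []

    def recording_llm(prompt: str) -> str:
        seen.append(prompt)  # capture exactly what the step sent to the model
        return '{"total": 1}'

    extract_total("Invoice INV-9", recording_llm)
    assert "Invoice INV-9" in seen[0]
```

The model's responses are replaced with fixed strings, so these tests are repeatable. Recorded production responses could be replayed the same way.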

When the system is modular, and the scope of each module is closed-world, you can build test sets that actually give you confidence.

This is the foundation for trust in production AI.

Building the right foundation

The future of AI in the enterprise doesn’t start with AGI. It starts with automation that works. That means focusing on closed-world problems that are structured, bounded and rich with opportunity for real impact.

You don’t need an agent that can do everything. You need a system that can reliably do something:

- A claim routed correctly.

- A document parsed accurately.

- A customer followed up with on time.

Those wins add up. They reduce costs, free up time and build trust in AI as a dependable part of the stack.

And getting there doesn’t require breakthroughs in prompt engineering or betting on the next model to magically generalize. It requires doing what good engineers have always done: Breaking problems down, building composable systems and wiring components together in ways that are testable and observable.

Event-driven multi-agent systems aren’t a silver bullet; they’re just a practical architecture for working with imperfect tools in a structured way. They let you isolate where intelligence is needed, contain where it’s not and build systems that behave predictably even when individual parts don’t.

This isn’t about chasing the frontier. It’s about applying basic software engineering to a new class of problems.

Sean Falconer is Confluent’s AI entrepreneur in residence.