Japanese AI lab Sakana AI has developed a new method that enables multiple large language models (LLMs) to cooperate on a single task, effectively creating a “dream team” of AI agents. The method, called Multi-LLM AB-MCTS, lets models perform trial-and-error and combine their distinct strengths to solve problems that are too complex for any individual model.

For enterprises, the method offers a way to build more robust and capable AI systems. Instead of being locked into a single provider or model, businesses could automatically draw on the best aspects of different frontier models, assigning the right AI to the right part of a task to achieve superior results.

The power of collective intelligence

Frontier AI models are evolving rapidly. However, each model has its own distinct strengths and weaknesses, derived from its unique training data and architecture. One might excel at coding, while another excels at creative writing. Sakana AI’s researchers argue that these differences are not a bug, but a feature.

“We see these biases and varied aptitudes not as limitations, but as valuable resources for creating collective intelligence,” the researchers write in their blog post. They believe that just as humanity’s greatest achievements often come from diverse teams, AI systems can also achieve more by working together. By pooling their intelligence, AI systems can solve problems that are beyond the reach of any single model.

Thinking longer at inference time

Sakana AI’s new algorithm is an “inference-time scaling” technique (also referred to as “test-time scaling”), an area of research that has become very popular recently. While most AI research has focused on “training-time scaling” (making models bigger and training them on larger datasets), inference-time scaling improves performance by allocating more computational resources after a model has been trained.

One common approach, seen in popular models such as OpenAI o3 and DeepSeek-R1, uses reinforcement learning to prompt models to generate longer, more detailed chain-of-thought (CoT) sequences. Another, simpler technique is repeated sampling, in which the model is given the same prompt multiple times to generate a variety of potential solutions, similar to a brainstorming session. Sakana AI’s work combines and advances these ideas.
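Repeated sampling (Best-of-N) is simple enough to sketch in a few lines. The Python below is a minimal illustration, not Sakana AI’s code: `toy_generate` and `toy_score` are hypothetical stand-ins for an LLM call and an answer checker, and the target answer is made up.

```python
import random
from typing import Callable, List, Tuple


def best_of_n(
    generate: Callable[[random.Random], str],
    score: Callable[[str], float],
    n: int,
    seed: int = 0,
) -> Tuple[str, float]:
    """Repeated sampling: draw n independent answers to the same prompt, keep the best."""
    rng = random.Random(seed)
    candidates: List[str] = [generate(rng) for _ in range(n)]
    best = max(candidates, key=score)
    return best, score(best)


# Hypothetical stand-ins (assumptions for illustration only).
TARGET = 42


def toy_generate(rng: random.Random) -> str:
    # Pretend the "model" guesses an integer answer between 0 and 99.
    return str(rng.randint(0, 99))


def toy_score(answer: str) -> float:
    # 1.0 for an exact match, smaller the further the guess is from TARGET.
    return 1.0 / (1 + abs(int(answer) - TARGET))


if __name__ == "__main__":
    for n in (1, 4, 16):
        answer, s = best_of_n(toy_generate, toy_score, n=n, seed=7)
        print(f"n={n:2d} best={answer} score={s:.3f}")
```

With a fixed seed, a larger `n` sees the same early samples plus more, so its best score can only stay equal or improve. The limitation is that every sample is drawn blindly: nothing learned from one attempt informs the next.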

“Our framework offers a smarter, more strategic version of Best-of-N (aka repeated sampling),” said Takuya Akiba, research scientist at Sakana AI and co-author of the paper. “It complements reasoning techniques like long CoT and RL. By dynamically selecting the search strategy and the appropriate LLM, this approach maximizes performance within a limited number of LLM calls, delivering better results on complex tasks.”

How adaptive branching search works

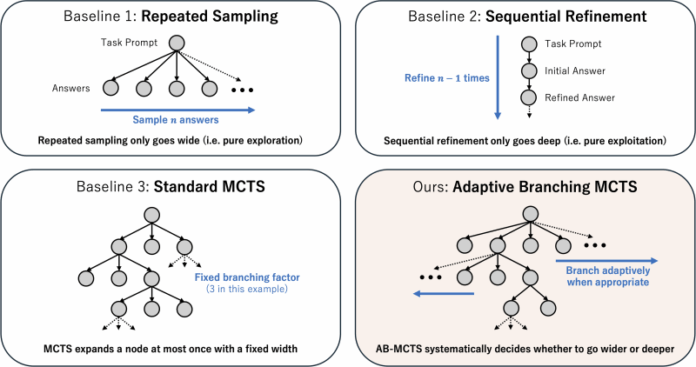

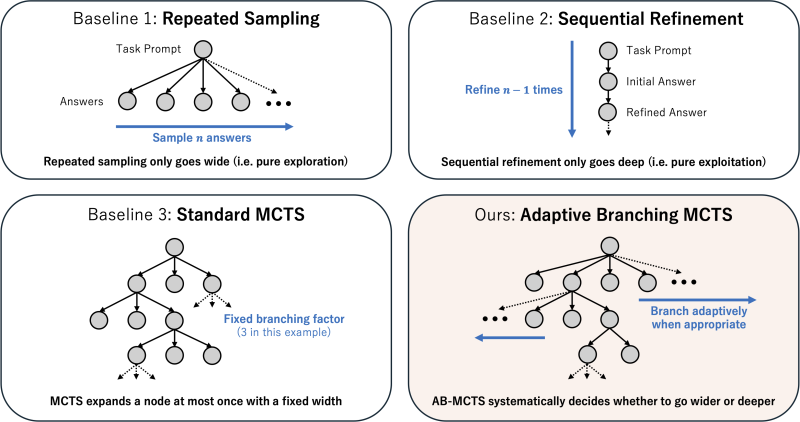

The core of the new method is an algorithm called Adaptive Branching Monte Carlo Tree Search (AB-MCTS). It enables an LLM to perform trial-and-error effectively by intelligently balancing two different search strategies: “searching deeper” and “searching wider.” Searching deeper means taking a promising answer and repeatedly refining it, while searching wider means generating completely new solutions from scratch. With AB-MCTS, the system can keep improving a good idea, but it can also pivot and try something new if it hits a dead end or discovers another promising direction.
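The two search directions map naturally onto a tree of candidate answers. The sketch below is a hypothetical data structure for illustration, not TreeQuest’s internal representation: “wider” adds a fresh answer under the root, “deeper” attaches a refinement beneath an existing answer, and the scores are made-up evaluator outputs.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)  # identity comparison avoids recursing through parent/children
class Node:
    answer: Optional[str]  # None for the root, which holds no answer yet
    score: float = 0.0     # hypothetical evaluator score in [0, 1]
    parent: Optional["Node"] = field(default=None, repr=False)
    children: List["Node"] = field(default_factory=list)

    def depth(self) -> int:
        """Number of edges between this node and the root."""
        d, node = 0, self
        while node.parent is not None:
            d, node = d + 1, node.parent
        return d


def search_wider(root: Node, answer: str, score: float) -> Node:
    """Generate a brand-new answer from scratch: a new child of the root."""
    child = Node(answer, score, parent=root)
    root.children.append(child)
    return child


def search_deeper(node: Node, refined: str, score: float) -> Node:
    """Refine an existing answer: a new child of that answer's node."""
    child = Node(refined, score, parent=node)
    node.children.append(child)
    return child


def best_node(root: Node) -> Node:
    """Highest-scoring node anywhere in the tree (iterative depth-first walk)."""
    best, stack = root, list(root.children)
    while stack:
        node = stack.pop()
        if node.score > best.score:
            best = node
        stack.extend(node.children)
    return best


if __name__ == "__main__":
    root = Node(answer=None)
    a = search_wider(root, "draft A", 0.4)
    search_wider(root, "draft B", 0.2)
    a2 = search_deeper(a, "draft A with edge case fixed", 0.7)
    top = best_node(root)
    print(top.answer, top.score, top.depth())
```

Here the refined draft wins and sits at depth 2, while a second fresh draft widens the root. Deciding *which* of these two moves to make next is the job of the search policy, which this structure deliberately leaves out.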

To accomplish this, the system uses Monte Carlo Tree Search (MCTS), a decision-making algorithm famously used by DeepMind’s AlphaGo. At each step, AB-MCTS uses probability models to decide whether it is more promising to refine an existing solution or to generate a new one.
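One way to make the “generate or refine” choice probabilistic is Thompson sampling: keep a posterior belief about how good each option is, draw one sample from each, and take the option with the highest draw. The sketch below assumes Beta posteriors, binary pass/fail feedback, and a single “refine” option rather than one per existing answer. These are simplifications chosen here for illustration; the probability models AB-MCTS actually uses may differ, and the pass rates in `simulate` are invented.

```python
import random
from typing import Dict


class BetaArm:
    """Beta(alpha, beta) belief about how often an action yields a passing answer."""

    def __init__(self) -> None:
        self.alpha = 1.0  # prior pseudo-count of passes (uniform prior)
        self.beta = 1.0   # prior pseudo-count of failures

    def update(self, passed: bool) -> None:
        if passed:
            self.alpha += 1.0
        else:
            self.beta += 1.0

    def sample(self, rng: random.Random) -> float:
        return rng.betavariate(self.alpha, self.beta)


def choose(arms: Dict[str, BetaArm], rng: random.Random) -> str:
    """Thompson sampling: one posterior draw per action, pick the largest draw."""
    draws = {name: arm.sample(rng) for name, arm in arms.items()}
    return max(draws, key=draws.__getitem__)


def simulate(steps: int, seed: int = 0) -> Dict[str, int]:
    rng = random.Random(seed)
    # Hypothetical pass rates, hidden from the search: refining a promising
    # answer ("deeper") succeeds more often than starting over ("wider").
    true_rate = {"generate_new": 0.3, "refine_existing": 0.8}
    arms = {name: BetaArm() for name in true_rate}
    counts = {name: 0 for name in true_rate}
    for _ in range(steps):
        action = choose(arms, rng)
        counts[action] += 1
        arms[action].update(rng.random() < true_rate[action])
    return counts


if __name__ == "__main__":
    print(simulate(500, seed=1))
```

Both posteriors start flat, so early choices are split between the two options; as evidence accumulates, draws for the stronger option dominate, yet the weaker option still gets an occasional turn. That residual exploration is what lets a search pivot when refinement stalls.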

The researchers took this a step further with Multi-LLM AB-MCTS, which decides not only “what” to do (refine vs. generate) but also “which” LLM should do it. At the start of a task, the system does not know which model is best suited to the problem. It begins by trying a balanced mix of the available LLMs and, as it progresses, learns which models are more effective, allocating more of the workload to them over time.
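Extending the same idea to several models, each (action, model) pair can get its own posterior, so a single Thompson draw decides both what to do and which LLM does it. This is a hypothetical simplification for illustration rather than the paper’s exact formulation; `model_a` through `model_c` and their pass rates are placeholders, not measurements of any real LLM.

```python
import random
from itertools import product
from typing import Dict, Tuple

Arm = Tuple[str, str]  # (action, model)


def run(steps: int, seed: int = 0) -> Dict[Arm, int]:
    rng = random.Random(seed)
    actions = ("generate_new", "refine_existing")
    models = ("model_a", "model_b", "model_c")  # hypothetical placeholders
    # Hidden pass rates (invented). model_c is best at refining.
    true_rate: Dict[Arm, float] = {
        ("generate_new", "model_a"): 0.20,
        ("generate_new", "model_b"): 0.30,
        ("generate_new", "model_c"): 0.35,
        ("refine_existing", "model_a"): 0.30,
        ("refine_existing", "model_b"): 0.45,
        ("refine_existing", "model_c"): 0.85,
    }
    arms = list(product(actions, models))
    # Beta(1, 1) prior per pair: every combination starts with the same flat
    # belief, so early choices are spread across actions and models.
    alpha = {arm: 1.0 for arm in arms}
    beta = {arm: 1.0 for arm in arms}
    counts = {arm: 0 for arm in arms}
    for _ in range(steps):
        draws = {a: rng.betavariate(alpha[a], beta[a]) for a in arms}
        chosen = max(draws, key=draws.__getitem__)
        counts[chosen] += 1
        # Binary outcome standing in for an evaluator's verdict.
        if rng.random() < true_rate[chosen]:
            alpha[chosen] += 1.0
        else:
            beta[chosen] += 1.0
    return counts


if __name__ == "__main__":
    ranked = sorted(run(800, seed=2).items(), key=lambda kv: -kv[1])
    for (action, model), n in ranked:
        print(f"{action:16s} {model}: {n}")
```

Over a run, the call budget drifts toward the pair that keeps passing, which mirrors the behavior described above: a balanced start, then more of the workload for the models that prove stronger.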

Putting the AI “dream team” to the test

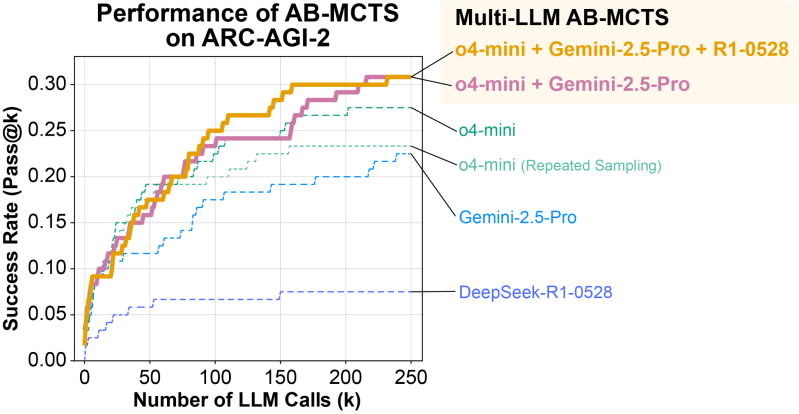

The researchers tested their Multi-LLM AB-MCTS system on the ARC-AGI-2 benchmark. ARC (Abstraction and Reasoning Corpus) is designed to test a human-like ability to solve novel visual reasoning problems, which makes it notoriously difficult for AI.

The team used a combination of frontier models, including Gemini 2.5 Pro, DeepSeek-R1, and o4-mini.

The collective of models found correct solutions for over 30% of the 120 test problems, a score that significantly outperformed any of the models working alone. The system demonstrated the ability to dynamically assign the best model to a given problem: on tasks where a clear path to a solution existed, the algorithm quickly identified the most effective LLM and used it more frequently.

More impressively, the team observed instances where the models together solved problems that had been impossible for any one of them alone. In one case, a solution generated by o4-mini was incorrect. The system, however, passed this flawed attempt to DeepSeek-R1 and Gemini 2.5 Pro, which were able to identify the error, correct it, and ultimately produce the right answer.

“This demonstrates that Multi-LLM AB-MCTS can flexibly combine frontier models to solve previously unsolvable problems, pushing the limits of what is achievable by using LLMs as a collective intelligence,” the researchers write.

Beyond each model’s individual strengths and weaknesses, the tendency to hallucinate can vary significantly between models, Akiba said. Building an ensemble that includes a model less prone to hallucination could deliver the best of both worlds: strong logical capabilities and strong groundedness. Because hallucination is a pressing problem in business settings, this approach could help mitigate it.

From research to real-world applications

To help developers and businesses apply this technique, Sakana AI has released the underlying algorithm as an open-source framework called TreeQuest, available under an Apache 2.0 license (usable for commercial purposes). TreeQuest provides a flexible API that lets users implement Multi-LLM AB-MCTS for their own tasks with custom scoring and logic.

“While we are in the early stages of applying AB-MCTS to specific business-oriented problems, our research reveals significant potential in several areas,” Akiba said.

Beyond the ARC-AGI-2 benchmark, the team successfully applied AB-MCTS to tasks such as complex algorithmic coding and improving the accuracy of machine learning models.

“AB-MCTS could also be highly effective for problems that require iterative trial-and-error, such as optimizing the performance metrics of existing software,” Akiba said. For example, it could be used to quickly find ways to reduce a web service’s response latency.

The release of a practical, open-source tool could pave the way for a new generation of more powerful and reliable enterprise AI applications.